bARefoot is a vibrotactile shoe prototype with high-frequency sensing and vibrotactile actuation. It closely synchronizes vibration with user actions to create virtual materials. bARefoot can be used to deepen the sense of immersion in VR by, for example, allowing users to probe the strength of a thin ice layer. It can be used in AR to augment existing materials with additional haptic cues, for example, to subtly indicate a desirable direction to walk towards or to make running on artificial ground more enjoyable. To let users experience a large variety of materials, we complement the design of bARefoot with vibrAteRial, a design tool for creating virtual materials. This design tool lets users encode haptic properties through a simple interface and quickly experience any virtual material they design. You can find the sources of the firmware here, and the design tool here.

2020

Haptics, Virtual Reality, Design Tool

We experience the world through a sensorimotor loop

While stepping on various surfaces, we can experience these surfaces through mechanoreceptors in the skin. These receptors sense vibrations at a very high frequency. Depending on the surface we are walking on, these receptors are stimulated differently, which creates a haptic experience.

For instance, when walking on pebbles, the stones move under the feet and feel different from walking on dirt, which is more compliant and uniform. Similarly, when walking on thin ice, one can experience small cracks as one applies pressure on the surface. These cracks are the material's response to the user's actions.

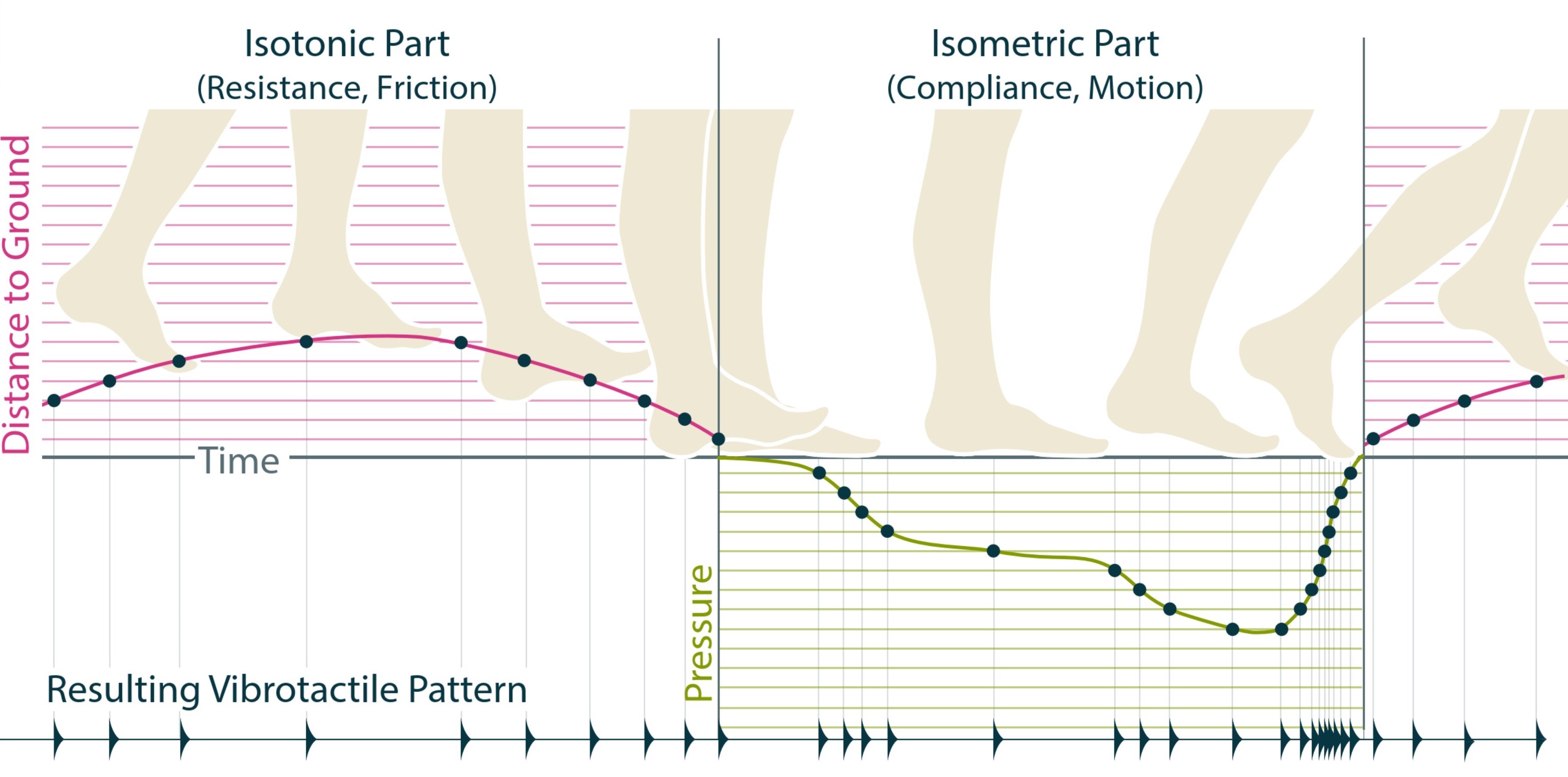

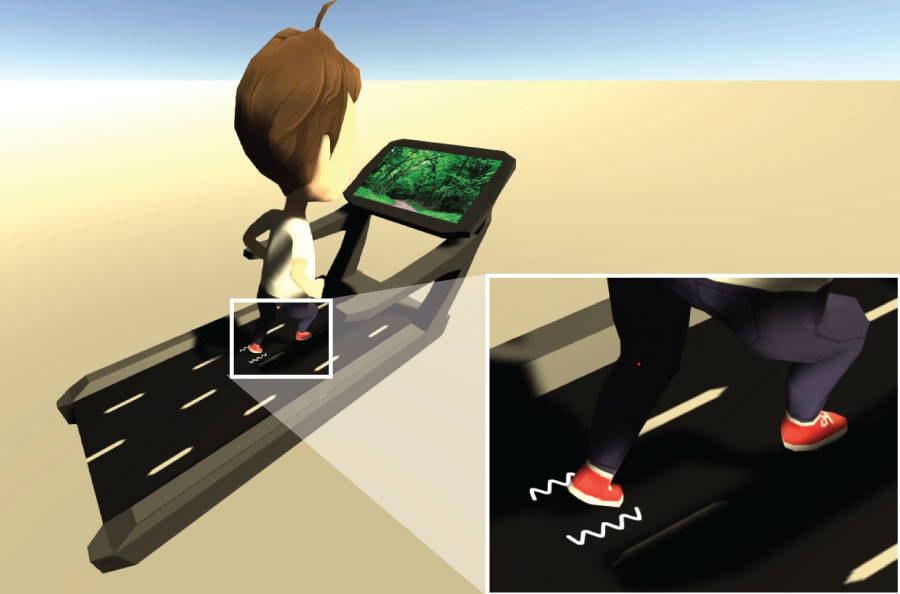

With bARefoot, we propose to create virtual materials that follow the same principles. We use motion coupled vibrations to create pulses at specific intervals. Consider the walking example depicted on the right. In the swing phase, the user can experience vibrations based on the foot's motion, which can simulate grass hitting the shoe. In the stamp phase, the user applies pressure on the ground. Depending on the level of pressure applied, the shoe emits more or fewer pulses. Keep in mind the example of the thin ice layer: the cracks experienced relate to the pressure applied on the surface.

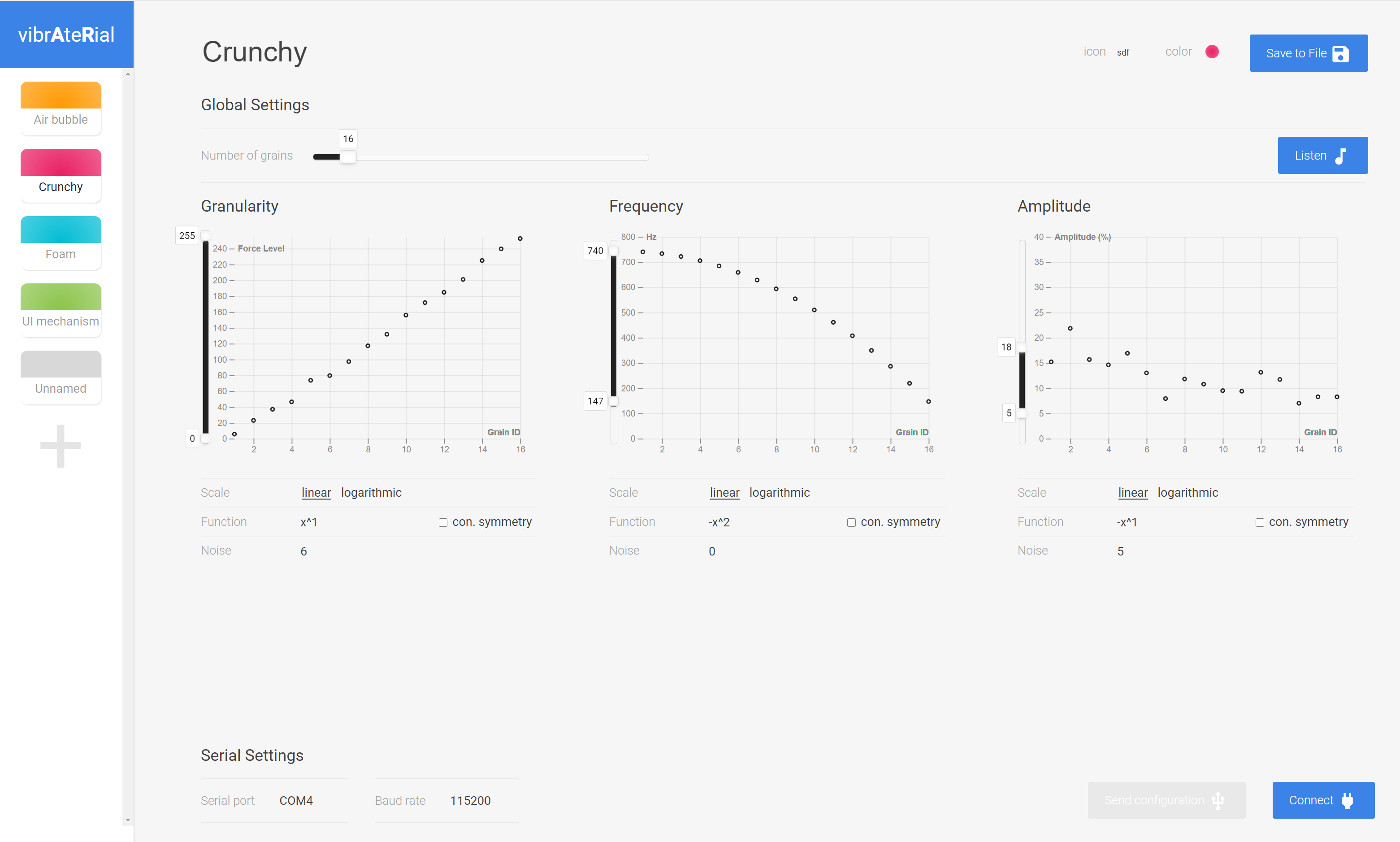

Each colored line in the image is called a grain. Changing the number of grains used by a virtual material changes the haptic experience. Several other parameters can be varied to change this experience, such as the frequency and amplitude of each grain (see below).
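The coupling between motion and pulses can be illustrated with a minimal sketch. It assumes a sensor reading normalized to the range 0 to 1 (for example, pressure under the sole) and grains spaced evenly over that range; the function names and the even spacing are illustrative assumptions, not the bARefoot firmware.

```python
from typing import List


def grain_positions(num_grains: int) -> List[float]:
    """Evenly spaced grain positions inside the normalized range (0, 1).

    Even spacing is an assumption made for this sketch.
    """
    if num_grains < 1:
        raise ValueError("num_grains must be at least 1")
    return [(i + 1) / (num_grains + 1) for i in range(num_grains)]


def crossed_grains(previous: float, current: float,
                   positions: List[float]) -> List[int]:
    """Indices of the grains lying between two consecutive sensor readings.

    A grain is crossed whether the reading rises or falls past it,
    so both pressing down and releasing produce pulses.
    """
    low, high = sorted((previous, current))
    return [i for i, p in enumerate(positions) if low < p <= high]


def count_pulses(samples: List[float], num_grains: int) -> int:
    """Total number of pulses triggered by a sequence of sensor readings."""
    positions = grain_positions(num_grains)
    return sum(
        len(crossed_grains(a, b, positions))
        for a, b in zip(samples, samples[1:])
    )


# The same pressure trace yields more pulses with more grains.
trace = [0.0, 0.3, 0.8, 0.1]
print(count_pulses(trace, 3))  # 6
print(count_pulses(trace, 7))  # 12
```

In this sketch no pulse is produced while the reading is constant, which reflects the idea that the vibration is a response to the user's action rather than a continuous signal.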

A shoe prototype using motion coupled vibrations

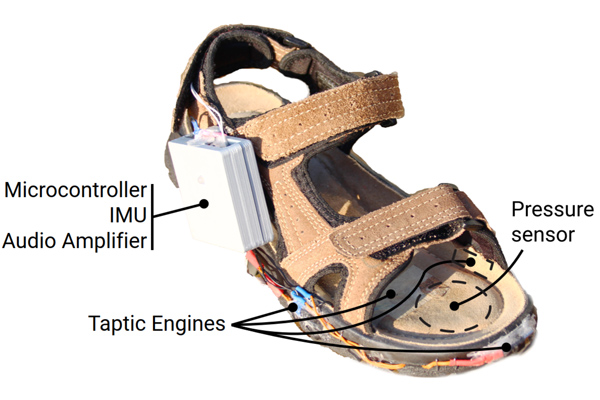

bARefoot is a shoe prototype augmented with Taptic engines (vibration motors), a pressure sensor under the sole that senses how much pressure the user applies to the ground when walking or probing it, and an inertial measurement unit that senses the foot's motion.

Based on the user's actions (i.e., walking or probing the ground), bARefoot plays pulses with different parameters. The configuration of these parameters, in other words the virtual material's configuration, can be changed over USB by connecting the shoe to a computer. This configuration can also change in real time while the user is interacting in mixed reality, by uploading configurations over Wi-Fi. For instance, one can combine bARefoot with an HTC Vive setup, use a Vive Tracker on the foot to locate it in space, and update the configuration based on which surface the user is walking on.
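As a rough sketch of the last idea, the snippet below picks a material from the tracked foot position and reports only changes, so that a new configuration would be uploaded once per transition. The rectangular regions, the material names, and the `MaterialSwitcher` class are hypothetical; the tracker and the upload step are left out because their interfaces are not described here.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class SurfaceRegion:
    """Axis-aligned floor rectangle (meters) mapped to a material name."""
    material: str
    x_min: float
    x_max: float
    z_min: float
    z_max: float

    def contains(self, x: float, z: float) -> bool:
        return (self.x_min <= x <= self.x_max
                and self.z_min <= z <= self.z_max)


def material_at(x: float, z: float, regions: Sequence[SurfaceRegion],
                default: str = "neutral") -> str:
    """Name of the first region containing the point, else the default."""
    for region in regions:
        if region.contains(x, z):
            return region.material
    return default


class MaterialSwitcher:
    """Remembers the active material and reports only transitions."""

    def __init__(self, regions: Sequence[SurfaceRegion],
                 default: str = "neutral") -> None:
        self.regions = list(regions)
        self.default = default
        self.active: Optional[str] = None

    def update(self, x: float, z: float) -> Optional[str]:
        """Return the new material if it changed, otherwise None."""
        material = material_at(x, z, self.regions, self.default)
        if material == self.active:
            return None
        self.active = material
        return material


regions = [
    SurfaceRegion("ice", 0.0, 2.0, 0.0, 2.0),
    SurfaceRegion("gravel", 2.0, 4.0, 0.0, 2.0),
]
switcher = MaterialSwitcher(regions)
for position in [(1.0, 1.0), (1.5, 1.0), (3.0, 1.0)]:
    changed = switcher.update(*position)
    if changed is not None:
        print("upload configuration for:", changed)
```

Reporting only transitions keeps the number of uploads low, which may matter when the configuration travels over a wireless link.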

Designing Virtual Material with vibrAteRial

Describing a haptic experience is a very challenging task, even for expert hapticians. Designing such experiences can also be very challenging: it requires users to understand the underlying concepts of these experiences.

With vibrAteRial, we tried to simplify this task as much as possible by allowing any user to play with the four parameters defining the haptic experience: the number of grains, their granularity, and the frequency and amplitude of each grain. Changing the number of grains changes how many pulses happen when the user is moving. The granularity sets the positions of the grains over the range of motion.

Once a user is ready to experience the virtual material, she can simply upload its configuration to the shoe connected over USB and start walking or probing the ground. Materials can easily be saved and shared with others, allowing them to experience the same materials remotely.
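To give a feel for what the frequency and amplitude of a grain control, here is a minimal sketch that synthesizes a single pulse as a short sine burst. The linear fade-out, the number of cycles, and the sample rate are assumptions chosen for illustration; the actual waveform played by the shoe may differ.

```python
import math
from typing import List


def grain_pulse(frequency_hz: float, amplitude: float,
                cycles: int = 3, sample_rate_hz: int = 8000) -> List[float]:
    """Samples of one pulse: a sine burst with a linear fade-out.

    frequency_hz and amplitude correspond to the per-grain parameters;
    cycles and sample_rate_hz are hypothetical values for this sketch.
    """
    if frequency_hz <= 0 or sample_rate_hz <= 0 or cycles <= 0:
        raise ValueError("frequency, sample rate and cycles must be positive")
    if not 0.0 <= amplitude <= 1.0:
        raise ValueError("amplitude must be within [0, 1]")
    n = max(1, round(cycles * sample_rate_hz / frequency_hz))
    return [
        amplitude * (1.0 - i / n)
        * math.sin(2.0 * math.pi * frequency_hz * i / sample_rate_hz)
        for i in range(n)
    ]


soft = grain_pulse(frequency_hz=100.0, amplitude=0.3)
sharp = grain_pulse(frequency_hz=400.0, amplitude=0.9)
print(len(soft), len(sharp))  # 240 60
```

With a fixed number of cycles, a higher frequency gives a shorter pulse, while the amplitude only scales its strength.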
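Saving and sharing a material amounts to serializing its parameters. The sketch below stores the four parameters named above as JSON; the field names, their types, and the JSON format itself are assumptions for illustration and do not describe the file format used by vibrAteRial.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class MaterialConfig:
    """Hypothetical container for the four material parameters."""
    name: str
    num_grains: int
    granularity: float
    frequency_hz: float
    amplitude: float


def to_json(config: MaterialConfig) -> str:
    """Serialize a material so it can be saved or sent to someone else."""
    return json.dumps(asdict(config), indent=2, sort_keys=True)


def from_json(text: str) -> MaterialConfig:
    """Rebuild a material; raises TypeError on missing or unknown fields."""
    return MaterialConfig(**json.loads(text))


ice = MaterialConfig(name="thin ice", num_grains=12, granularity=0.5,
                     frequency_hz=250.0, amplitude=0.8)
shared = to_json(ice)
assert from_json(shared) == ice
```

A plain-text format like this is easy to attach to a message or keep under version control, which suits the goal of letting others reproduce the same material.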

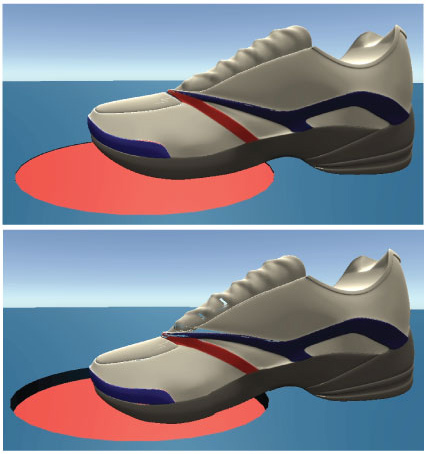

Enhancing immersion in virtual environments and augmenting reality with embodied experiences

Using bARefoot, one can design novel haptic experiences for virtual reality. For instance, bARefoot can simulate compliant surfaces, which can be used to design buttons controlled with the feet (see right-hand image). It also enables users to probe surfaces as they would in the real world, to check whether a surface is slippery or whether it can sustain their weight. In a gaming scenario, the user could, for instance, walk on a wooden bridge and probe the next planks to see whether they will break under their weight (see image below).

bARefoot can also be used in augmented reality scenarios to enhance an activity or provide embodied experiences. While the user runs on a treadmill, bARefoot can simulate various ground surfaces that represent the running path displayed on the treadmill's screen (see representative image below). One can also use the material experiences generated by bARefoot as a means to convey subtle, non-disruptive information. For example, one could map information such as the remaining distance to a given destination, or subtle navigation cues, to the material experience of walking.

bARefoot: Generating Virtual Materials using Motion Coupled Vibration in Shoes
Paul Strohmeier, Seref Güngör, Luis Herres, Dennis Gudea, Bruno Fruchard, Jürgen Steimle
UIST'20: ACM Symposium on User Interface Software and Technology, pp.13