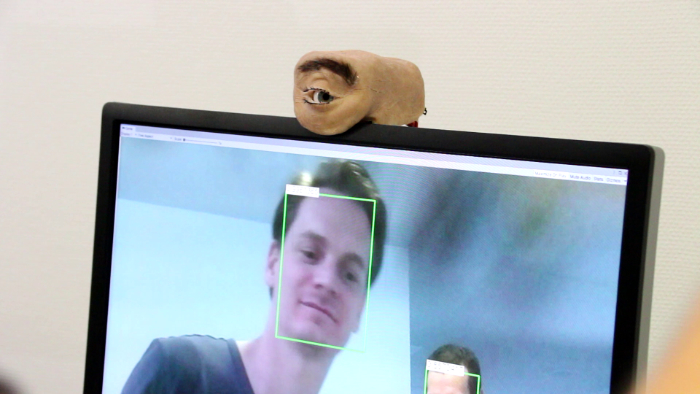

Sensing devices are everywhere, up to the point where we become unaware of their presence. Eyecam is a critical design prototype exploring the potential futures of sensing devices. Eyecam is a webcam shaped like a human eye. It can see, blink, look around and observe you. In this project, we present speculative design scenarios with Eyecam to emphasize the sensing capabilities of current sensing devices and how they can be insidious or deceitful. Eyecam was designed and built by Marc Teyssier whose work is famous for augmenting digital devices with human-like behaviors (see also Skin-On Interfaces and MobiLimb).

2021

Critical Design · Speculative Design · Open Source

See also: Markpad, Polysense, On-Body Sensors, Tactjam

Eyecam: an Anthropomorphic Webcam

Taking inspiration from critical design and anthropomorphic interfaces, Eyecam is a physical instantiation of the implications an exemplary sensing device can have for a human. To this end, Eyecam exaggerates concealed functionalities using anthropomorphic metaphors (e.g., field of view, capturing turned on). Modeled on the human eye morphology, Eyecam comprises an actuated eyeball with a pupil replaced with a camera, actuated eyelids and actuated eyebrows whose combined movements enable human-like behaviors. By making it physical, Eyecam makes abstract concepts more tangible and illustrative. The exaggeration of its appearance captures attention and triggers spontaneous reactions and critical reflections.

A Tool to Rethink our Relations with Sensing Devices

Our work falls in line with research efforts in HCI transcending mere usability of devices to encompass emotions, values and affects, and the increasing interest in ethical, social, and privacy issues with ubiquitous sensing devices. Yet, in contrast to prior work, we use anthropomorphic design to take a speculative turn, challenging the reader to imagine themselves interacting with Eyecam, actively contribute to the debate, and speculate and iterate on potential futures. To this end, Eyecam is a plug-and-play physical prototype that can be re-used, re-built, or re-purposed. We demonstrate the purpose of Eyecam with five provocative design fictions which explore different types of its behavior. These open up a debate on plausible and implausible ways future sensing devices might be designed.

Observer: Eyecam revealing (un)awareness

Standing in the middle of a room, we might find no indicators of, for instance, a camera: where it is placed, which direction it faces, or what its field of vision is. Often, it is not even discernible whether and how a digital object at hand, e.g. a smart speaker with a built-in microphone, can sense. How might we make perceptible if and what it is sensing? For other types of sensors this might be an even greater challenge. For instance, motion-sensing radar chips such as Google’s Soli are not (yet) linked to a perceptually intuitive metaphor. How might we use existing metaphors that communicate what it is doing? For other sensing techniques, e.g., contact-based object recognition on interactive fabrics, the convenient form factor might support unobtrusiveness rather than awareness.

How might we make use of form, materiality, or aesthetics to foster privacy awareness?

Mediator: Eyecam Augmenting Communication

The ability to accurately infer a conversational partner’s thoughts or feelings (so-called empathic accuracy), as well as one’s reaction to them, impacts how a relationship develops and whether we build trust or mistrust, both in the physical world and online. Yet, many important social cues are missing online. How might we bridge the gap between co-located and remote communication through behavioral devices? The transmission of more information (e.g. non-verbal cues such as gaze direction, heartbeat, breath) that might otherwise pass unseen is an obvious solution, but comes with strings attached. Sharing physiological signals with a (remote) conversational partner can generate closeness and spark empathy, but might also create an image different from what the user wants to communicate about their state of mind. It remains an open question how much sensing is needed to create empathy and what is “too much”.

How might we strike a balance between communicative and intrusive?

Mirror: Eyecam as Self Reflection

Just like a mirror, sensing devices can reflect what they are sensing at the moment, or what they sensed moments, days, or even years ago. Sensed data might lead us to adjust ourselves, e.g., to “sound more mature” or “look professional”, but can also promote awareness of ourselves and others, affirmation and connection, and foster (self-)reflection. How might we anticipate changes in behavior or perception caused by sensing devices? Some changes of behavior or perception might resonate positively, some negatively; others might even be ethically questionable. To this end, there are different design options available for how information is sensed, processed, maybe abstracted or coded, and then fed back to the user. How might we balance accuracy and abstraction in sensed data? How a system approaches the different notions of opacity, transparency, and anonymity can influence what the user makes of this data and how it affects them.

How might we inform the user about what the system learned about them?

Agent: Eyecam as Anthropomorphic Incarnation

If we want sensing devices to become more proactive and autonomous, we would need to make design decisions about how much agency they should be granted. How might we let them decide on their own what and when to sense? Or should we? Sensing devices sharing our space would need to consider cultural and social norms, including both verbal and non-verbal communication cues. For instance, a smart speaker would ideally not interrupt human conversations; for an eye it would be polite to maintain eye contact. Users might start to rationalize its personality, affectionately bond with it, or project existing prejudices onto it. How might we avoid embodying stereotypes? With more agency, complexity increases. Hence, the definition of rule sets becomes both necessary and challenging (as well illustrated by Asimov’s Robot series).

How might we teach sensing devices to respect boundaries?

Eyecam: Revealing Relations between Humans and Sensing Devices through an Anthropomorphic Webcam. Marc Teyssier, Marion Koelle, Paul Strohmeier, Bruno Fruchard, Jürgen Steimle. CHI'21: Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems.