Thumb-to-finger interactions leverage the thumb for precise, eyes-free input with high sensory bandwidth. While previous research explored gestures based on touch contact and finger movement on the skin, interactions leveraging depth, such as pressure and hovering input, are still under-investigated. MicroPress is a proof-of-concept device that can detect both the precise thumb pressure applied on the skin and the hover distance between the thumb and the index finger. It builds on a wearable sensor array of Inertial Measurement Units (IMUs) and a bi-directional RNN deep learning approach to enable fine-grained control while preserving the natural tactile feedback and touch of the skin. We present two interactive scenarios that pose challenges for real-time input and demonstrate the efficacy of MicroPress, and we validate its design with a study involving eight participants and short per-user calibration steps.

2022

Embodied Interaction, Microgestures, Machine Learning


MicroPress: a Proof-of-Concept for Detecting MicroGestures

MicroPress is a proof-of-concept system that detects, in real time, the pressure applied with the thumb at different locations on the index finger and the hovering distance between the thumb fingertip and the index finger (see figure above). The system’s goal is to demonstrate the benefits and capabilities of the interaction technique through application examples. Our implementation preserves the natural hand movement and sensory feedback of the skin by relying on IMU sensors placed on the three phalanges of the index finger, a small magnet placed on the thumbnail, and deep learning leveraging bi-directional LSTM RNNs for time series data processing. We trained two deep learning models that work together: the first infers pressure and location, while the second infers distance. To the best of our knowledge, MicroPress is the first system to demonstrate that pressure and hovering input can be recognized for thumb-to-finger interactions.

Real-time Interactive Scenarios

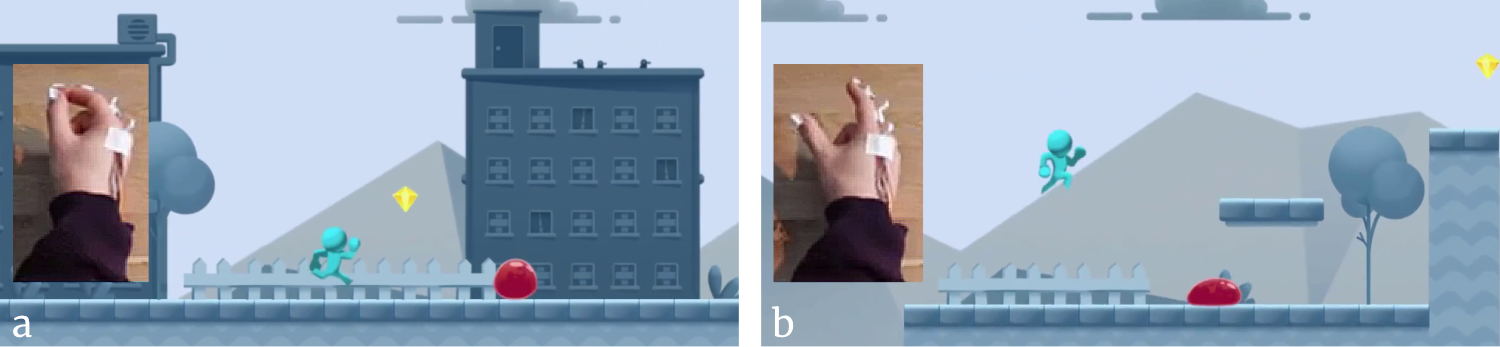

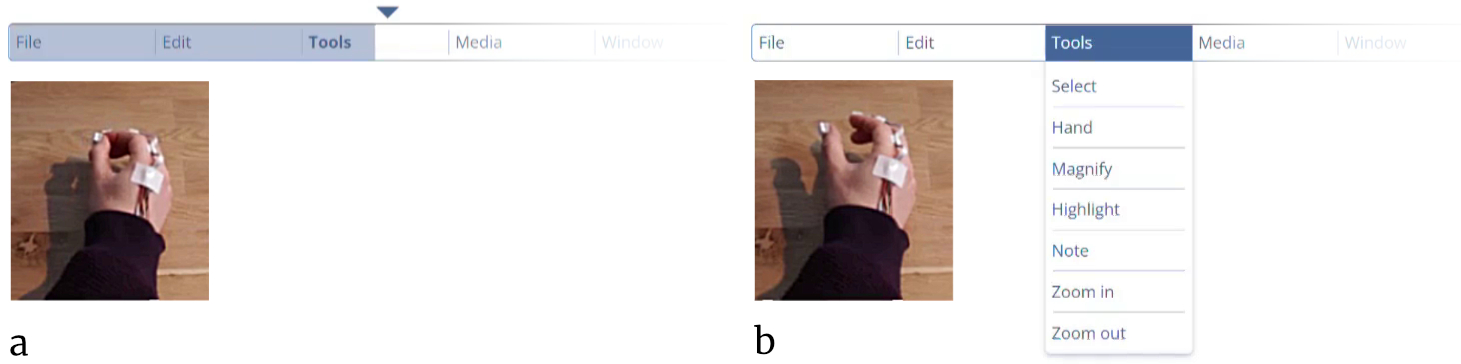

We present two interactive scenarios that demonstrate how users can leverage pressure and hover input in real time. The first is a menu selection technique that uses pressure for targeting and hovering for validation. The second consists of controlling the horizontal and vertical movements of a video game character in a 2D space, with touch zones for directions, pressure for speed, and hover input for jumping. In both scenarios, MicroPress keeps latency low enough for comfortable and accurate control.

In the video game scenario, the user controls a character in two dimensions (see Video Figure at [02:10]). The character moves horizontally at various speeds and jumps to various heights. The user controls the direction of the character's lateral movements by pressing one of two touch zones. The level of pressure applied on a zone controls the character's movement speed, with a running jump triggered at maximum pressure. Hover input controls the height of a stationary jump through a linear function. This example leverages embodied interactions for real-time gaming, thus providing a new type of playful user experience. Mapping pressure to motion creates an interesting relationship here, as the user and the character share a physical effort. We used the Unity3D platform game template and connected the player controls to the MicroPress pipeline. Data was updated at 100 FPS.
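The control mapping described above can be sketched as follows. This is only an illustration, not the Unity3D implementation used in the project: the zone names, the constants, the normalized pressure range of 0 to 1, and the `map_input` function are all assumptions introduced for the example.

```python
from dataclasses import dataclass
from typing import Optional

# All constants below are hypothetical values chosen for illustration.
MAX_SPEED = 5.0           # top horizontal speed, in game units per second
MAX_JUMP_HEIGHT = 3.0     # highest stationary jump, in game units
MAX_HOVER_MM = 30.0       # hover distance mapped to the highest jump
RUN_JUMP_PRESSURE = 0.95  # normalized pressure treated as "maximum pressure"


@dataclass
class Command:
    velocity_x: float    # signed horizontal speed (negative means left)
    jump_height: float   # 0.0 means no stationary jump
    running_jump: bool   # True when maximum pressure triggers a running jump


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def map_input(zone: Optional[str], pressure: float, hover_mm: float) -> Command:
    """Map one sensor sample to a character command.

    zone is "left", "right", or None when the thumb is not touching;
    pressure is assumed to be normalized to [0, 1].
    """
    pressure = clamp(pressure, 0.0, 1.0)
    if zone in ("left", "right"):
        direction = -1.0 if zone == "left" else 1.0
        # Pressure scales speed; near-maximum pressure triggers a running jump.
        return Command(direction * pressure * MAX_SPEED, 0.0,
                       pressure >= RUN_JUMP_PRESSURE)
    # No touch: hover distance sets the stationary jump height linearly.
    ratio = clamp(hover_mm / MAX_HOVER_MM, 0.0, 1.0)
    return Command(0.0, ratio * MAX_JUMP_HEIGHT, False)
```

For instance, `map_input("right", 0.5, 0.0)` yields half of the top speed to the right, while `map_input(None, 0.0, 15.0)` yields a stationary jump at half of the maximum height.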

The menu selection scenario (see Video Figure at [01:40]) uses a single touch zone on the thumb. The user controls a slider with pressure to target a menu item (light blue bar on the right-hand figure) and selects an item (i.e. a menu root or an item in a menu) by dwelling on it for 1 s. A selected item is indicated by an arrowhead above it. To confirm a selection, the user validates it by hovering above a threshold of 20 mm for 1 s. A menu item is deselected after a short delay if no pressure is detected. We used a Node.js server to communicate between MicroPress and the React application, with data updated at 100 FPS.
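The selection logic described above can be sketched as a small state machine. This is a hedged illustration rather than the project's React or Node.js code: the `MenuSelector` class, the equal-width pressure bands per item, the millisecond timestamps, and the 500 ms release delay are assumptions; only the 1 s dwell and the 20 mm hover threshold come from the text.

```python
from typing import Optional

DWELL_MS = 1000     # dwell time to select or confirm (1 s, from the text)
HOVER_MM = 20.0     # hover threshold to confirm (from the text)
RELEASE_MS = 500    # hypothetical "short delay" before deselecting


class MenuSelector:
    """Tracks targeting, selection, and confirmation for one menu."""

    def __init__(self, n_items: int):
        self.n_items = n_items
        self.target: Optional[int] = None     # item under the pressure slider
        self.target_since = 0
        self.selected: Optional[int] = None   # item selected by dwelling
        self.hover_since: Optional[int] = None
        self.idle_since: Optional[int] = None

    def update(self, t_ms: int, pressure: float, hover_mm: float) -> Optional[int]:
        """Feed one sample; return the confirmed item index, or None."""
        if pressure > 0.0:
            self.idle_since = None
            self.hover_since = None
            # Assumption: normalized pressure is split into equal bands.
            item = min(int(pressure * self.n_items), self.n_items - 1)
            if item != self.target:
                self.target, self.target_since = item, t_ms
            elif t_ms - self.target_since >= DWELL_MS:
                self.selected = item
            return None
        # No pressure: the thumb has lifted off the skin.
        self.target = None
        if self.selected is None:
            return None
        if hover_mm > HOVER_MM:
            self.idle_since = None
            if self.hover_since is None:
                self.hover_since = t_ms
            elif t_ms - self.hover_since >= DWELL_MS:
                confirmed = self.selected
                self.selected = None
                self.hover_since = None
                return confirmed
            return None
        # Neither pressing nor hovering high enough: start the release timer.
        self.hover_since = None
        if self.idle_since is None:
            self.idle_since = t_ms
        elif t_ms - self.idle_since >= RELEASE_MS:
            self.selected = None
        return None
```

With samples arriving every 10 ms (matching the 100 FPS update rate), holding a steady pressure for one second selects the targeted item, and then hovering above 20 mm for another second returns its index from `update`.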

Evaluating the System

We conducted a validation study to test the accuracy of MicroPress in real time and to assess how the model generalizes to other hand geometries. We used a within-subject design with 8 participants and two tasks: one to evaluate touch location and pressure accuracy, and the other to evaluate hover distance accuracy. In short, we found evidence that the system yields high normalized pressure accuracy for various users at six distinct locations on their index finger (6.71 ± 0.23% absolute error), as well as high hovering distance accuracy (0.57 ± 0.04 mm absolute error). These results demonstrate that depth is a dimension that can extend touch design spaces for thumb-to-finger interactions. More details can be found in the paper.
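As a rough illustration of how an absolute-error figure of this kind can be computed, the sketch below summarizes predictions against ground-truth values. The summary above does not say what the ± term denotes, so reporting the standard error of the mean is an assumption here, as is the `absolute_error_summary` helper itself; the sample values in the usage note are made up.

```python
import math
import statistics
from typing import Sequence, Tuple


def absolute_error_summary(predicted: Sequence[float],
                           actual: Sequence[float]) -> Tuple[float, float]:
    """Return (mean absolute error, standard error of that mean).

    Assumption: the spread is reported as a standard error; the
    source summary does not specify which statistic it uses.
    """
    if len(predicted) != len(actual) or len(predicted) < 2:
        raise ValueError("need two equally long sequences with at least 2 samples")
    errors = [abs(p - a) for p, a in zip(predicted, actual)]
    mean = statistics.fmean(errors)
    sem = statistics.stdev(errors) / math.sqrt(len(errors))
    return mean, sem
```

For example, `absolute_error_summary([1.0, 2.0, 3.0, 4.0], [1.5, 2.5, 2.0, 4.0])` summarizes four hypothetical hover-distance predictions in millimeters against their reference values.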

MicroPress: Detecting Pressure and Hover Distance in Thumb-to-Finger Interactions. Rhett Dobinson, Marc Teyssier, Jürgen Steimle, Bruno Fruchard. SUI'22: Symposium on Spatial User Interaction.