Prior work has used microgestures to enable gestural interaction while holding objects. Yet these existing methods are prone to false activations caused by natural finger movements while holding or manipulating the object. We address this issue with SoloFinger, a novel concept that enables the design of microgestures that are robust against movements that naturally occur during primary activities. Using a data-driven approach, we establish that single-finger movements are rare in everyday hand-object actions and infer a single-finger input technique that is resilient to false activations. We demonstrate the robustness of this concept using a white-box classifier on a pre-existing dataset comprising 36 everyday hand-object actions. Our findings validate that simple SoloFinger gestures can remove the need for complex finger configurations or delimiting gestures, and that SoloFinger is applicable to diverse hand-object actions. Finally, we demonstrate SoloFinger's high performance on commodity hardware using random forest classification.

2021

Interaction Design, Microgestures, Machine Learning

See also: Markpad, Side-Crossing Menus, BodyLoci, Micropress

SoloFinger: Single-Finger Grasping Microgestures

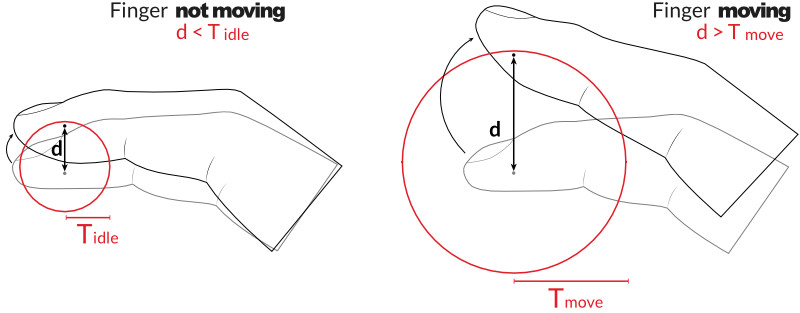

SoloFinger microgestures are based on the observation that during everyday hand-object interaction, multiple fingers tend to move concurrently, whereas a single finger rarely moves extensively on the object while all others stay idle. This observation was informed by prior findings that finger movements are highly correlated during object manipulation, a phenomenon our work leverages. We restrict our investigation to objects without movable elements such as mechanical buttons or sliders.
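The correlation argument can be made concrete with a few lines of NumPy. The sketch below is an illustration, not the authors' analysis: it assumes per-finger flexion signals sampled over time (here synthetic, with all five fingers driven by one shared grasping motion plus a little noise) and computes pairwise Pearson correlations between the fingers' frame-to-frame velocities. The function name `finger_correlation` and the synthetic data are hypothetical.

```python
import numpy as np


def finger_correlation(flexion: np.ndarray) -> np.ndarray:
    """Pairwise Pearson correlation between finger movement signals.

    flexion: shape (T, 5), one column per finger (thumb..pinky), holding a
    hypothetical flexion-angle time series for each finger.
    Returns a (5, 5) correlation matrix of the frame-to-frame velocities.
    """
    velocity = np.diff(flexion, axis=0)         # movement per frame, (T-1, 5)
    return np.corrcoef(velocity, rowvar=False)  # columns are the variables


# Synthetic grasp (illustration only): every finger follows one shared
# closing/opening motion, scaled per finger, plus small independent noise.
rng = np.random.default_rng(0)
t = np.linspace(0, 2 * np.pi, 200)
shared = 30 * np.sin(t)                         # common grasping motion
flexion = shared[:, None] * np.array([0.6, 1.0, 1.0, 0.9, 0.8])
flexion = flexion + rng.normal(0, 0.05, size=(200, 5))

corr = finger_correlation(flexion)
off_diag = corr[~np.eye(5, dtype=bool)]         # drop the trivial self-correlations
print(f"mean inter-finger correlation: {off_diag.mean():.2f}")
```

Off-diagonal values close to 1 indicate that the fingers move together. A SoloFinger gesture is, by design, the opposite pattern: one finger's signal varies while the others stay flat.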

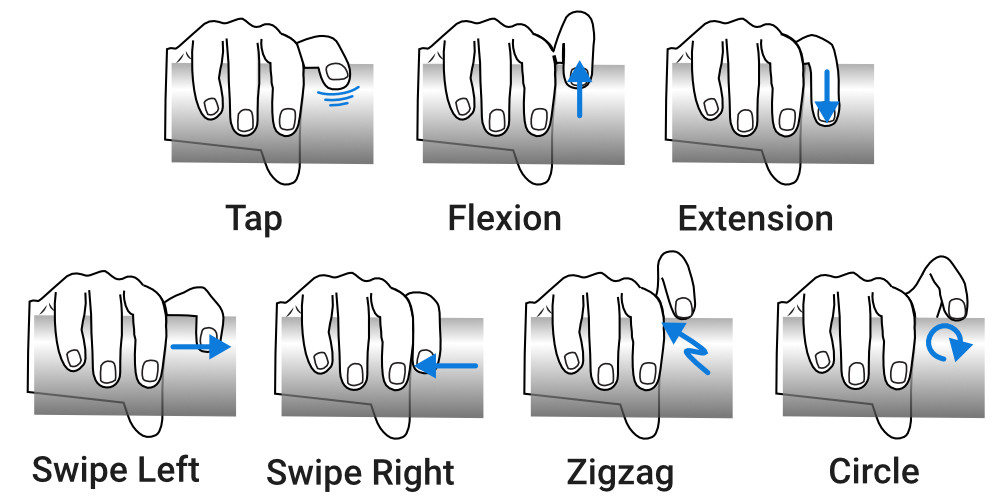

A SoloFinger gesture (see image below) involves moving a single finger by a considerable yet comfortable extent while all other fingers remain static. It is performed with the same hand that holds the object, directly on the object itself. SoloFinger gestures are not limited to a specific finger. We recommend using the thumb, index, or middle finger, as gestures with the ring and pinky fingers were shown to be less robust and were also subjectively less preferred.

Validating the SoloFinger Concept with a White-Box Classifier

To validate this concept, we recorded the finger movements of 13 participants while they grasped objects and performed everyday tasks (see image below). We captured their finger movements with an OptiTrack system and also asked them to perform SoloFinger gestures and to keep their hand idle.
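One way to turn such recordings into a per-finger movement measure is sketched below. It assumes fingertip positions in millimetres that have already been transformed into a hand-local coordinate frame, so that moving the whole hand (and the held object) is not counted as finger movement. This preprocessing step, the array layout, and the name `finger_displacement` are assumptions, not details taken from the paper.

```python
import numpy as np


def finger_displacement(tips: np.ndarray) -> np.ndarray:
    """Per-finger movement extent within one trial (hypothetical helper).

    tips: shape (T, 5, 3), fingertip positions in millimetres for the five
    fingers (thumb..pinky), ASSUMED to be expressed in a hand-local frame.
    Returns shape (5,): the largest distance each fingertip moves away from
    its position in the first frame.
    """
    offsets = tips - tips[0]                     # (T, 5, 3) relative to start
    distances = np.linalg.norm(offsets, axis=2)  # (T, 5) Euclidean distances
    return distances.max(axis=0)                 # peak excursion per finger


# Usage: a synthetic trial in which only the index finger slides 20 mm.
tips = np.zeros((50, 5, 3))
tips[:, 1, 0] = np.linspace(0, 20, 50)
print(finger_displacement(tips))
```

Using the peak excursion from the starting pose, rather than total path length, keeps small back-and-forth tremor from accumulating into a large value.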

We identified two key thresholds needed to recognize such gestures while avoiding false activations: a threshold for idle fingers and a threshold for moving fingers.
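A minimal sketch of such a two-threshold rule follows. The threshold values are hypothetical placeholders (the paper derives its thresholds from the recorded data), and the input is assumed to be each finger's movement extent within one time window, ordered thumb to pinky. A gesture is reported only if exactly one finger exceeds the moving threshold and every other finger stays below the idle threshold.

```python
import numpy as np

# HYPOTHETICAL threshold values in millimetres, for illustration only.
IDLE_THRESHOLD_MM = 5.0
MOVE_THRESHOLD_MM = 15.0

FINGERS = ("thumb", "index", "middle", "ring", "pinky")


def detect_solofinger(displacement,
                      idle_thr=IDLE_THRESHOLD_MM,
                      move_thr=MOVE_THRESHOLD_MM):
    """Return the name of the gesturing finger, or None.

    displacement: length-5 sequence with the movement extent of each finger
    (thumb..pinky) within one time window.
    """
    d = np.asarray(displacement, dtype=float)
    moving = np.flatnonzero(d > move_thr)
    if moving.size != 1:
        return None  # no finger, or several fingers, moved extensively
    others = np.delete(d, moving[0])
    if np.any(others >= idle_thr):
        return None  # another finger is not idle: likely a grasp adjustment
    return FINGERS[moving[0]]


print(detect_solofinger([1, 20, 2, 1, 0]))  # only the index finger moves
print(detect_solofinger([1, 20, 8, 1, 0]))  # middle finger not idle enough
```

Because the idle threshold is lower than the moving threshold, a finger whose movement falls between the two blocks detection. This gap trades some sensitivity for robustness: moderate co-movement of a second finger, typical of grasp adjustments, suppresses the gesture instead of triggering it.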

Our initial results show that, of the 36 hand-object actions, 23 produce no false activations and only 6 produce a significant number of them (more details in the paper).

Proof-of-Concept: Black-Box Classifier and VR Glove

We use a random forest classifier provided by the scikit-learn Python library and the Hi5 VR glove (see image below). From our initial analysis, we learned that finger movements vary across objects. We therefore evaluated two conditions: training with and without action information. Without action information, the task was to classify individual trials into all 9 classes (7 gestures + 1 static hold + 1 action without gesture), separately for every participant. With action information, we trained separate models for each of the five actions. With action information, we observe no false activations and an average accuracy of 89% across all gestures. Without action information, false activations occur in only 2.12% of the trials (17 out of 800), and the average classification accuracy is 86%.
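The two evaluation conditions can be sketched with scikit-learn's `RandomForestClassifier` and `cross_val_score`. This is an illustrative setup under stated assumptions, not the authors' pipeline: the glove feature representation, the 5-fold cross-validation, and the hyperparameters are assumptions, integer labels 0 to 6 stand in for the seven gestures, and the false-activation measure (non-gesture trials predicted as a gesture, counted over all trials) is one plausible reading of the reported rate. The helper names `evaluate_participant` and `false_activation_rate` are hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

# 9 classes: labels 0..6 stand in for the 7 gestures (ASSUMED encoding),
# 7 = static hold, 8 = action without gesture.
STATIC_HOLD, ACTION_ONLY = 7, 8
N_CLASSES = 9


def evaluate_participant(X, y, actions=None, folds=5, seed=0):
    """Mean cross-validated accuracy for one participant.

    X: (n_trials, n_features) glove features per trial (feature design ASSUMED).
    y: (n_trials,) class labels in 0..8.
    actions: optional (n_trials,) action IDs. If given, one model is trained
    per action ("with action information"); otherwise a single model covers
    all trials ("without action information").
    """
    if actions is None:
        groups = [np.ones(len(y), dtype=bool)]
    else:
        groups = [actions == a for a in np.unique(actions)]
    scores = []
    for mask in groups:
        clf = RandomForestClassifier(n_estimators=100, random_state=seed)
        scores.append(cross_val_score(clf, X[mask], y[mask], cv=folds).mean())
    return float(np.mean(scores))


def false_activation_rate(y_true, y_pred):
    """Share of all trials where a non-gesture trial was predicted as a gesture."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    non_gesture = np.isin(y_true, [STATIC_HOLD, ACTION_ONLY])
    return float(np.mean(non_gesture & (y_pred < STATIC_HOLD)))


# Usage with synthetic, easily separable data (illustration only):
rng = np.random.default_rng(0)
y = np.repeat(np.arange(N_CLASSES), 30)   # 30 trials per class
actions = np.arange(len(y)) % 5           # 5 actions, interleaved
X = np.eye(N_CLASSES)[y] * 3.0 + rng.normal(0, 0.3, size=(len(y), N_CLASSES))
print("without action info:", evaluate_participant(X, y))
print("with action info:   ", evaluate_participant(X, y, actions))
```

Training one model per action gives each classifier a narrower distribution of natural finger movements to separate from gestures, at the cost of needing to know which action the user is performing.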

SoloFinger: Robust Microgestures while Grasping Everyday Objects Adwait Sharma, Michael A. Hedderich, Divyanshu Bhardwaj, Bruno Fruchard, Aditya Shekhar Nittala, Dietrich Klakow, Daniel Ashbrook, Jürgen Steimle CHI'21: Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems